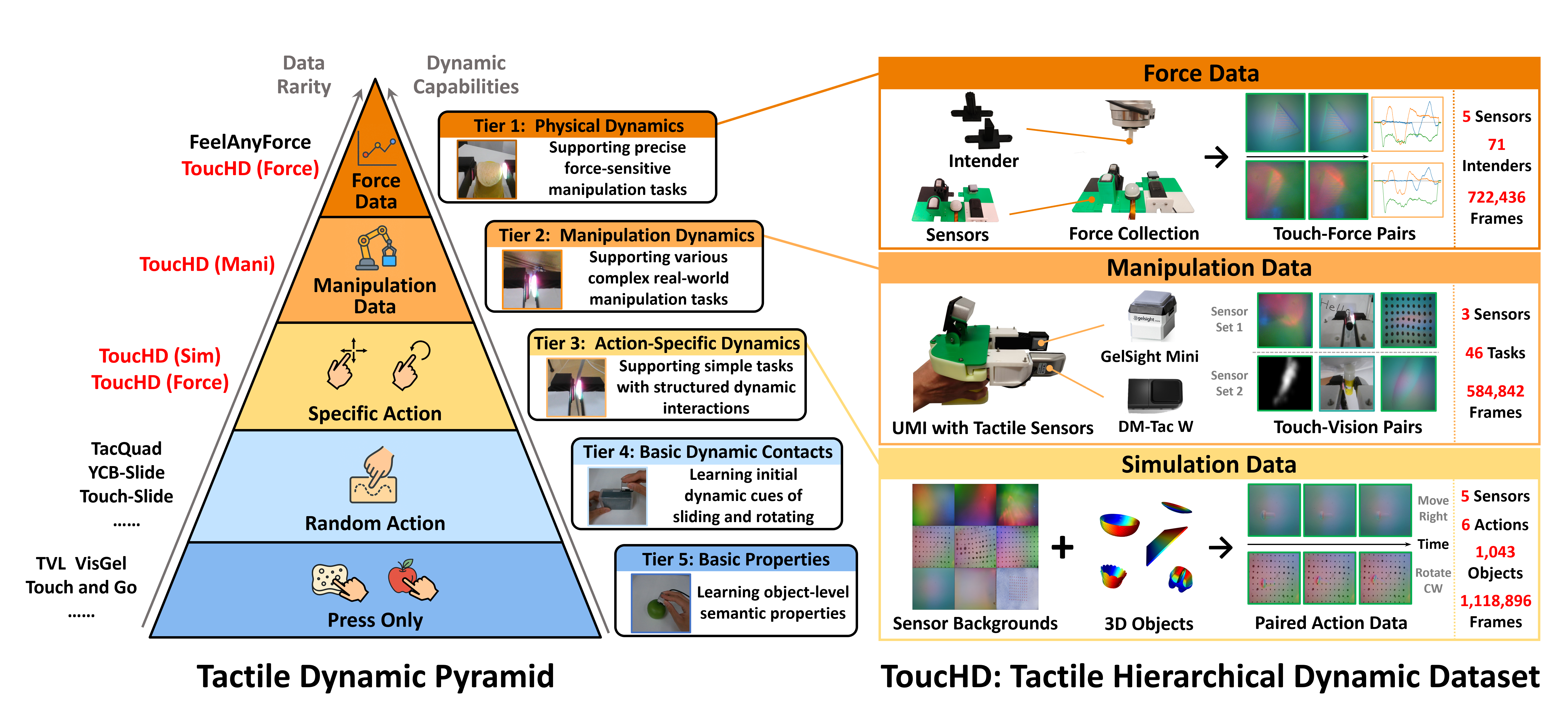

(ICLR 2026) AnyTouch 2: General Optical Tactile Representation Learning For Dynamic Tactile Perception

🚀 Welcome to the repo of AnyTouch 2! If our project helps you, please give us a star ⭐ on GitHub to support us. 🙏

- Quick Start Demo Code

- Dataset Pre-processing

- Sparsh Evaluation Code

- Create Environment

      conda create -n anytouch2 python=3.9
      conda activate anytouch2

- Install PyTorch 2.4.0 + CUDA 12.4

      pip install torch==2.4.0 torchvision==0.19.0 --index-url https://download.pytorch.org/whl/cu124

- Install other required packages:

      git clone https://github.com/GeWu-Lab/AnyTouch2.git
      cd AnyTouch2
      pip install -r requirements.txt
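After installation, it can be useful to confirm that the interpreter in the active environment sees the expected PyTorch build. The following is a minimal sketch, not part of the AnyTouch 2 repo: `check_torch` is a hypothetical helper name, and the `PYTHON` variable is an assumed override for the interpreter path.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with AnyTouch 2): report the torch / CUDA status
# of the Python interpreter on PATH, or of the one named by $PYTHON.
check_torch() {
  local py="${PYTHON:-python}"
  if ! command -v "$py" >/dev/null 2>&1; then
    echo "python not found"
    return 2
  fi
  "$py" - <<'EOF'
import importlib.util
import sys

# Exit early with a readable message if torch is not installed in this environment.
if importlib.util.find_spec("torch") is None:
    print("torch not installed")
    sys.exit(1)

import torch

# torch.version.cuda is None for CPU-only builds.
print("torch", torch.__version__,
      "| cuda build", torch.version.cuda,
      "| cuda available", torch.cuda.is_available())
EOF
}
```

Calling `check_torch` inside the `anytouch2` environment should report a `2.4.0` build compiled against CUDA 12.4 if the install commands above succeeded; whether CUDA is reported as available depends on the local driver.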

- Download AnyTouch 2 Model Checkpoints into `checkpoints/` (Complete the form to get access first)

      huggingface-cli download --repo-type model xxuan01/AnyTouch2-Model --local-dir checkpoints

  Checkpoint Performance:

| num_frames | stride | TAG<br>Acc ↑ | Cloth<br>Acc ↑ | Slip / Delta Force (Sparsh)<br>F1 Score ↑ / RMSE ↓ | Force (Sparsh)<br>RMSE ↓ | Force (ToucHD)<br>RMSE ↓ |
| --- | --- | --- | --- | --- | --- | --- |
| 4 | 2 | 76.97 | 42.31 | 86.66 / 87.80 (DG)<br>97.96 / 80.83 (Mini) | 624.26 (DG)<br>202.14 (Mini) | 894.32 (DG)<br>1051.03 (Mini) |
| 2 | 6 | 74.15 | 40.76 | 86.60 / 83.15 (DG)<br>97.85 / 89.21 (Mini) | 643.91 (DG)<br>208.41 (Mini) | 1076.33 (DG)<br>1311.27 (Mini) |

- Run `quick_start.sh` (Coming Soon)

      bash scripts/quick_start.sh

- Download ToucHD (Force) (Complete the form to get access first), Touch and Go, and Cloth into `datasets/`

      ### Download ToucHD (Force). Please complete the form to get access first.
      huggingface-cli download --repo-type dataset xxuan01/BAAI/ToucHD-Force --local-dir datasets
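Because both downloads are gated behind an access form, a denied request can leave the target directory missing or empty. The sketch below only checks that `checkpoints` and `datasets` exist and contain at least one entry; `dir_nonempty` is a hypothetical helper, and no assumption is made about the file names inside either directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the directory exists and has at least one entry.
dir_nonempty() {
  [ -d "$1" ] && [ -n "$(ls -A "$1" 2>/dev/null)" ]
}

# Run from the repository root after the download commands above.
for d in checkpoints datasets; do
  if dir_nonempty "$d"; then
    echo "ok: $d"
  else
    echo "empty or missing: $d"
  fi
done
```

A non-empty directory does not prove the download is complete, so this is only a quick first check before starting training.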

- Pre-process the datasets (Coming Soon)

- Run scripts to start downstream training and evaluation

      bash scripts/run_probe_tag.sh
      bash scripts/run_probe_cloth.sh
      bash scripts/run_probe_touchd.sh
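To run the three probe scripts back to back and stop at the first failure, a small wrapper along these lines may help. This is a sketch rather than something provided by the repo: `run_all` is a hypothetical function name, and it assumes each script can be launched with plain `bash` from the repository root.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run each given script in order, stopping at the first
# missing file or non-zero exit status.
run_all() {
  local script
  for script in "$@"; do
    if [ ! -f "$script" ]; then
      echo "missing: $script" >&2
      return 1
    fi
    echo "running: $script"
    bash "$script" || { echo "failed: $script" >&2; return 1; }
  done
}
```

From the repository root, the call would be `run_all scripts/run_probe_tag.sh scripts/run_probe_cloth.sh scripts/run_probe_touchd.sh`. Stopping early avoids spending GPU time on later probes when an earlier one fails because of a missing dataset or checkpoint.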
